Lesson 11: NLP Pipelines

NLP Pipeline

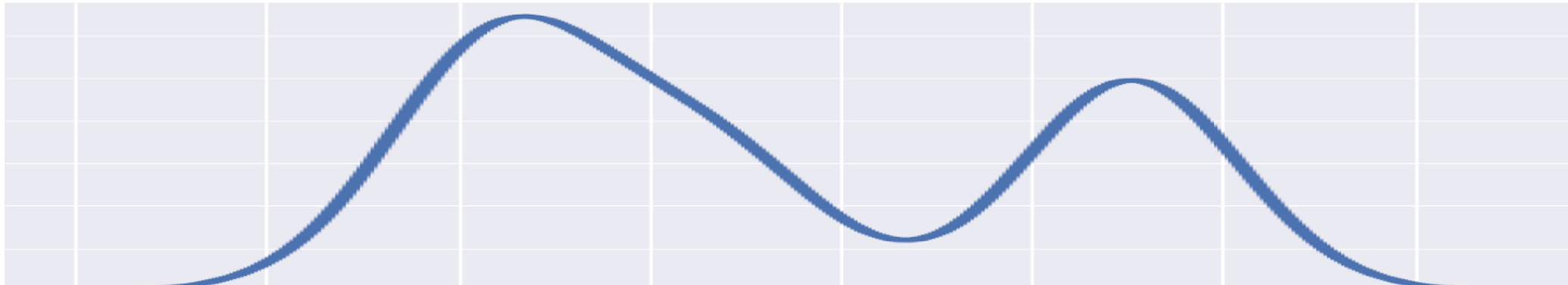

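An NLP pipeline consists of the sequential steps needed to accomplish an NLP task. The following steps are usually used in building NLP applications: data access, text preparation, feature extraction, modeling, evaluation, deployment, and monitoring and continuous improvement.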

Solving an NLP task requires a sufficient amount of text data, so you need to access the data, prepare it, and extract numerical features (vector representations) from the text through encoding or word embedding. Algorithms then use these numerical features for modeling and evaluation. A model with satisfactory performance is deployed to production, where its performance is monitored and the model is continuously updated to keep it from degrading.

Data Access

Imagine you are a data scientist in a company that provides Home Phone Services. You have the task of building a chatbot system that detects whether an inquiry is about New Services, Appointment, Internet and Repair Support, Billing or Something Else, then routes the inquiry to the appropriate team. Historical queries that were handled by the various teams can be used to build an ML chatbot system. Each of these queries was also labeled with its category, such as New Services, Appointment, etc.

When there is insufficient data for building an ML chatbot, you could look for patterns in the available historical queries that indicate the category of a query. Pattern-matching rules can then be used to separate queries into the various categories.
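As a rough illustration of pattern-matching rules, the sketch below routes a query to one of the categories from the scenario above using regular expressions. The keyword patterns and the `route_query` function are hypothetical and chosen only for this example; real rules would come from inspecting the historical queries.

```python
import re

# Hypothetical keyword patterns per category (assumptions for illustration).
CATEGORY_PATTERNS = {
    "New Services": re.compile(r"\b(new (line|service|plan)|sign up|subscribe)\b", re.I),
    "Appointment": re.compile(r"\b(appointment|schedule|reschedule)\b", re.I),
    "Internet and Repair Support": re.compile(r"\b(not working|outage|repair|broken)\b", re.I),
    "Billing": re.compile(r"\b(bill(s|ing)?|invoice|charge[sd]?|refund|payment)\b", re.I),
}

def route_query(query: str) -> str:
    """Return the first category whose pattern matches, else 'Something Else'."""
    for category, pattern in CATEGORY_PATTERNS.items():
        if pattern.search(query):
            return category
    return "Something Else"

print(route_query("I was charged twice on my last bill"))
print(route_query("Can I reschedule my appointment?"))
print(route_query("What are your opening hours?"))
```

Rules are checked in dictionary order, so a query that matches several categories is routed to the first one listed. A real system would need an explicit policy for such overlaps.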

If the data is small and we need to use ML techniques for NLP, we could consider using suitable public customer support text data or data obtained through web scraping. One limitation of external data is that it may differ from the queries seen in production. More data can also be collected, but this can be time-consuming.

Data augmentation techniques can also be used to enlarge a small dataset. These include synonym replacement (replacing words with their synonyms), bigram flipping (reversing the order of the words in a bigram), entity replacement (replacing entities such as locations, names of people, etc.), and adding noise to the data, for example by replacing words with other words that have similar spellings. Libraries such as NLPAug could be used to implement some of these data augmentation techniques.
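To make two of these techniques concrete, here is a minimal sketch of synonym replacement and bigram flipping in plain Python. It does not use NLPAug; the `SYNONYMS` table and both function names are assumptions made up for this example.

```python
import random

# Hypothetical, hand-written synonym table (illustration only).
SYNONYMS = {
    "phone": ["telephone", "handset"],
    "broken": ["faulty", "damaged"],
    "bill": ["invoice", "statement"],
}

def synonym_replace(tokens, rng):
    """Replace each token that has an entry in SYNONYMS with a random synonym."""
    return [rng.choice(SYNONYMS[t]) if t in SYNONYMS else t for t in tokens]

def bigram_flip(tokens, rng):
    """Pick one adjacent pair of tokens at random and swap the two words."""
    if len(tokens) < 2:
        return list(tokens)
    i = rng.randrange(len(tokens) - 1)
    flipped = list(tokens)
    flipped[i], flipped[i + 1] = flipped[i + 1], flipped[i]
    return flipped

rng = random.Random(0)  # fixed seed so repeated runs give the same output
query = "my phone is broken".split()
print(synonym_replace(query, rng))
print(bigram_flip(query, rng))
```

Augmented queries keep the label of the original query, so each one adds a new labeled training example.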

Text Preparation

Text preparation is a vital aspect of NLP and involves text cleaning and text normalization. Text preparation transforms text data into a form that is useful for building an NLP model. The text cleaning and normalization tasks used in a specific NLP project depend on the requirements and nature of the project.

Text cleaning is the transformation of text into a more meaningful representation. Text cleaning typically involves the following:

Text normalization is the transformation of text into a standard or consistent format, making it easier to process and analyze. Text normalization tasks usually include the following:
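As one possible illustration of both ideas, the sketch below assumes a handful of commonly used steps: removing HTML tags and URLs for cleaning, then lowercasing, accent stripping, and punctuation removal for normalization. The choice of steps and the function names `clean_text` and `normalize_text` are assumptions for this example, and a given project may need different ones.

```python
import re
import unicodedata

def clean_text(text: str) -> str:
    """Strip HTML tags and URLs, then collapse repeated whitespace."""
    text = re.sub(r"<[^>]+>", " ", text)        # drop HTML tags
    text = re.sub(r"https?://\S+", " ", text)   # drop URLs
    return re.sub(r"\s+", " ", text).strip()

def normalize_text(text: str) -> str:
    """Lowercase, strip accents, and remove punctuation."""
    text = unicodedata.normalize("NFKD", text.lower())
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = re.sub(r"[^\w\s]", " ", text)        # punctuation becomes a space
    return re.sub(r"\s+", " ", text).strip()

raw = "<p>My   phone is DEAD!</p> See https://example.com/help"
print(normalize_text(clean_text(raw)))
```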

Feature Extraction

Feature extraction in NLP involves transforming text data into a numerical form suitable for the algorithm used to train the model. The transformation of text data into its numerical or vector representation is called encoding or vectorization. The representation of text units (words, sentences, documents, etc.) as vectors in a common vector space is called a vector space model.

There are various vector representation approaches that allow us to represent text in a numerical or machine-readable form, ranging from basic to state-of-the-art approaches. Basic vector representation approaches include one-hot encoding, bag of words, bag of n-grams, and TF-IDF, while advanced vector representations include word embeddings such as Word2vec and GloVe.
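To show what two of the basic representations look like, here is a small pure-Python sketch of bag of words and TF-IDF over a toy corpus. The corpus and function names are made up for this example, and the TF-IDF variant used (term frequency divided by document length, idf = log(N / df), no smoothing) is one of several in common use; libraries often apply a smoothed formula instead.

```python
import math
from collections import Counter

# Toy corpus (illustration only).
docs = [
    "my phone bill is wrong",
    "my phone line is dead",
    "book a repair appointment",
]
tokenized = [d.split() for d in docs]
vocab = sorted({tok for doc in tokenized for tok in doc})

def bag_of_words(tokens):
    """Count vector over the shared vocabulary."""
    counts = Counter(tokens)
    return [counts[term] for term in vocab]

def tf_idf(tokens):
    """TF-IDF with tf = count / document length and idf = log(N / df)."""
    counts = Counter(tokens)
    n_docs = len(tokenized)
    vector = []
    for term in vocab:
        df = sum(1 for doc in tokenized if term in doc)  # document frequency
        idf = math.log(n_docs / df)
        vector.append(counts[term] / len(tokens) * idf)
    return vector

print(vocab)
print(bag_of_words(tokenized[0]))
print([round(x, 3) for x in tf_idf(tokenized[0])])
```

Words that appear in only one document, such as "bill", receive a higher TF-IDF weight than words shared across documents, such as "my".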

A good text representation should capture the linguistic properties of a text, such as its semantic (meaning) and syntactic (grammatical) structure.

Text data represented as vectors in a vector space is then used for NLP tasks such as text classification, information retrieval, text clustering, topic modeling, content-based recommendation, and sentiment analysis.
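As a small example of using vectors for one of these tasks, the sketch below performs a very simple form of information retrieval: it represents the query and each document as bag-of-words count vectors and returns the document with the highest cosine similarity. The function names and sample documents are assumptions for this example.

```python
import math
from collections import Counter

def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def most_similar(query, documents):
    """Return the document whose bag-of-words vector is closest to the query."""
    q = Counter(query.lower().split())
    return max(
        documents,
        key=lambda d: cosine_similarity(q, Counter(d.lower().split())),
    )

documents = [
    "how do I pay my bill",
    "my internet is not working",
    "book a technician appointment",
]
print(most_similar("internet not working", documents))
```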

Modeling

NLP models used to accomplish NLP tasks could be heuristic or machine learning models. Heuristic models are hard-coded logical rules. For example, a rule-based spam filter could be created with a pre-defined list of words likely to be found in spam email. A pattern-based rule could be created to search for a specific pattern in a text. For instance, a pattern could be specified to search for dates and phone numbers in an entity extraction task.
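A pattern-based rule for entity extraction might look like the sketch below. The two regular expressions are deliberately simplified assumptions: they cover dates written as `2024-03-15` or `15/03/2024` and phone numbers written as `555-123-4567` or `(555) 123-4567`, and would miss many other real-world formats.

```python
import re

# Simplified patterns (assumptions for illustration, not exhaustive).
DATE_PATTERN = re.compile(r"\b(?:\d{4}-\d{2}-\d{2}|\d{1,2}/\d{1,2}/\d{4})\b")
PHONE_PATTERN = re.compile(r"(?:\(\d{3}\)\s*|\b\d{3}[-.\s])\d{3}[-.\s]\d{4}\b")

def extract_entities(text: str) -> dict:
    """Find all date-like and phone-like substrings in the text."""
    return {
        "dates": DATE_PATTERN.findall(text),
        "phones": PHONE_PATTERN.findall(text),
    }

message = "Call me at (555) 123-4567 or 555-987-6543 before 2024-03-15."
print(extract_entities(message))
```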

While a rule-based model is a good starting point, adding an ML model as the data grows is much more efficient: ML models benefit from larger datasets, whereas building ever more complex rules to handle larger data can be costly. Sometimes, the heuristic rules can be used to generate new features for the ML model. For example, the number of spam words in an email could be used as a feature for an ML model.
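The spam example can be sketched as follows: the same word list drives both a heuristic filter and a feature for a later ML model. The `SPAM_WORDS` list, the threshold of 2, and the extra features in `extract_features` are hypothetical choices made for this illustration.

```python
import re

# Hypothetical spam word list (illustration only).
SPAM_WORDS = {"free", "winner", "prize", "urgent", "click"}

def tokenize(email: str) -> list:
    """Lowercase the email and split it into alphabetic tokens."""
    return re.findall(r"[a-z']+", email.lower())

def spam_word_count(email: str) -> int:
    """Number of tokens in the email that appear in SPAM_WORDS."""
    return sum(1 for tok in tokenize(email) if tok in SPAM_WORDS)

def rule_based_is_spam(email: str, threshold: int = 2) -> bool:
    """Heuristic model: flag as spam when enough spam words are present."""
    return spam_word_count(email) >= threshold

def extract_features(email: str) -> dict:
    """Features that an ML classifier could consume, including the rule output."""
    return {
        "spam_word_count": spam_word_count(email),
        "n_tokens": len(tokenize(email)),
        "has_exclamation": "!" in email,
    }

email = "URGENT: click here to claim your FREE prize!"
print(rule_based_is_spam(email))
print(extract_features(email))
```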

In model building, you could stack models by using the outputs of other models as input into another model. You could also use the ensemble approach, where predictions are generated with different models and the final prediction is then determined through a majority vote mechanism. Both model stacking and ensembling could be used in a single NLP task. Transfer learning could also be used by applying pre-trained NLP models to the data, especially when you don’t have sufficient data for model training.
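The majority vote mechanism can be sketched in a few lines. The three "models" below are hypothetical stand-ins (simple keyword functions) used only to show how individual predictions are combined; in practice they would be trained classifiers.

```python
from collections import Counter

def majority_vote(predictions):
    """Return the most common label; ties go to the label seen first."""
    return Counter(predictions).most_common(1)[0][0]

def ensemble_predict(models, query):
    """Collect one prediction per model and combine them by majority vote."""
    return majority_vote([model(query) for model in models])

# Hypothetical toy models standing in for trained classifiers.
def bill_model(q):
    return "Billing" if "bill" in q.lower() else "Something Else"

def refund_model(q):
    return "Billing" if "refund" in q.lower() else "Something Else"

def repair_model(q):
    return "Internet and Repair Support" if "broken" in q.lower() else "Something Else"

models = [bill_model, refund_model, repair_model]
print(ensemble_predict(models, "I want a refund on my bill"))
```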

Evaluation

Evaluation is a way of assessing the extent to which a project is successful. Model performance metrics and business KPIs are used to measure the success of an NLP project.

A model performance metric is needed to measure how good the NLP model is. Model accuracy is one example of such a metric. Different model performance metrics might be suitable for different NLP use cases, so it is important to select a relevant one. It is usually good practice to compare multiple NLP models for each project on the chosen performance metric, then select the model with the best score.
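As a concrete illustration, the sketch below computes accuracy along with precision, recall, and F1 for one class. Precision, recall, and F1 are not named in the text above; they are included here as commonly used alternatives when accuracy alone is not informative, for example with imbalanced classes. The labels are made-up sample data.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 score, treating `positive` as the target class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Made-up labels for illustration.
y_true = ["Billing", "Billing", "Repair", "Repair", "Other"]
y_pred = ["Billing", "Repair", "Repair", "Repair", "Billing"]
print(accuracy(y_true, y_pred))
print(precision_recall_f1(y_true, y_pred, positive="Billing"))
```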

A business KPI is used to measure the overall impact or value of the NLP project. KPIs such as increasing productivity, improving customer experience, or increasing revenue or profit by a defined percentage could be used for this purpose. For example, an automated NLP chatbot could increase the productivity of a business by freeing up employees to focus on other tasks instead of responding to customer queries. This could also translate into cost savings, increased profitability and customer satisfaction.

For example, if a customer submits a complaint by email, a traditional approach to handling the issue is to have someone analyze it and forward it to the appropriate department. Sometimes, the issue may be forwarded to several departments before it arrives at the right one. With NLP, an automated support ticket classification system could be built to help predict where the customer issue should be forwarded. This will cut down on response time and likely increase customer satisfaction and loyalty.

Deployment

Just like any other data science, machine learning or AI project, an NLP project is an iterative process. When you have evaluated the model and are satisfied with its performance, the project can be cleaned up, tested and deployed to the production environment.

Monitoring and Continuous Improvement

Once the project is in the production environment, it needs to be continuously monitored and updated when needed. The model should be updated when its performance in production drifts away from the performance measured during development. In the absence of ground truth, data drift (a change in the distribution of the input data relative to the training data) could be used to determine when to retrain and update the model.
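One very simple way to watch for data drift in text, shown here only as a sketch, is to track the share of production tokens that never appeared in the training data (the out-of-vocabulary rate). This particular signal, the 0.3 threshold, and the function names are assumptions for illustration; real monitoring setups often compare full feature or embedding distributions instead.

```python
def oov_rate(texts, vocabulary):
    """Fraction of tokens in `texts` that are not in the training vocabulary."""
    tokens = [tok for text in texts for tok in text.lower().split()]
    if not tokens:
        return 0.0
    return sum(tok not in vocabulary for tok in tokens) / len(tokens)

def drift_detected(train_texts, prod_texts, threshold=0.3):
    """Flag drift when the out-of-vocabulary rate exceeds the threshold."""
    vocabulary = {tok for text in train_texts for tok in text.lower().split()}
    return oov_rate(prod_texts, vocabulary) > threshold

# Made-up sample data for illustration.
train = ["my bill is wrong", "my phone is dead"]
production = ["fiber router keeps rebooting", "my router is dead"]
print(drift_detected(train, production))
```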

In some situations, if there is sufficient data, the model can be retrained regularly. The retrained model’s performance is usually compared with the performance of the active model, and if the newly trained model is better, it should be used to update the model in production. The output of the model in production should also be monitored to ensure the output is acceptable and reasonable. The NLP pipeline can be automated such that retraining happens automatically, and the model in production is automatically updated if the newly retrained model is better.
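The comparison between the active model and a newly retrained model could be automated along the lines of the sketch below. It assumes models are plain callables that map a text to a label, that accuracy on a held-out labeled set is the chosen metric, and that `min_gain` is a hypothetical margin the new model must exceed before it replaces the active one.

```python
def select_production_model(active_model, candidate_model,
                            eval_texts, eval_labels, min_gain=0.0):
    """Return (model, score) for whichever model should serve production."""
    def score(model):
        predictions = [model(text) for text in eval_texts]
        hits = sum(p == y for p, y in zip(predictions, eval_labels))
        return hits / len(eval_labels)

    active_score = score(active_model)
    candidate_score = score(candidate_model)
    # Promote the candidate only if it is better by more than min_gain.
    if candidate_score > active_score + min_gain:
        return candidate_model, candidate_score
    return active_model, active_score
```

Keeping the active model when the scores are equal avoids replacing a working model without a measurable benefit.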