Deep Neural Networks in Practice

In this lesson, we will focus on applying Deep Neural Networks (DNNs) to traditional regression and classification problems, as well as to image classification. We will use Keras, the high-level API of TensorFlow, to explore how DNNs can be applied to practical problems such as predicting house prices from various features, forecasting customer churn in a business context, and classifying grayscale images. By working through these applications, you’ll gain insight into how DNNs can be used to address real-world challenges across different domains.

Building Artificial Neural Networks in Keras

Keras is an interface for solving machine learning problems using deep learning. It is a high-level API of TensorFlow written in Python. TensorFlow is an end-to-end open-source machine learning platform.

import keras

# X_train, y_train, X_test and y_test are assumed to be defined already
model = keras.Sequential()
model.add(keras.Input(shape=(2,)))  # input layer with two features
# first hidden layer; input_shape can be passed here alternatively
model.add(keras.layers.Dense(3, activation='relu'))
model.add(keras.layers.Dense(1, activation='sigmoid'))  # output layer
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
model.fit(X_train, y_train, epochs=5)  # fit the model
model.evaluate(X_test, y_test)  # evaluate the model
model.predict(X_test)  # use the model for prediction

The code above follows these steps to build a model in Keras.

Initializing the model: The model is initialized using keras.Sequential(). This lets you build the model layer by layer. A sequential model is simple to use when you are building a feed-forward neural network.

Adding the input layer: The input layer is added with keras.Input(shape=(2,)), indicating that the model expects input data with two features. Explicitly specifying the input layer is optional: omitting it delays weight initialization until training, while specifying it initializes weights as layers are added.

Adding the first hidden layer: The first hidden layer is added using keras.layers.Dense(3, activation='relu'). It has 3 neurons and uses the ReLU activation function. You can add more hidden layers with activation functions such as ReLU, sigmoid, or tanh to increase the model’s capacity for learning.

Adding the output layer: The output layer is added with keras.layers.Dense(1, activation='sigmoid'). It has one node, which is suitable for binary classification. The sigmoid activation outputs a value between 0 and 1, representing the probability that the input belongs to class 1.

Compiling the model: The model is compiled with the model.compile() method. This step specifies the optimizer (Adam), the loss function (binary_crossentropy for binary classification tasks), and the metrics to track (accuracy). Compiling configures the model for training.

Fitting the model: The model is trained using the fit() method, with parameters such as batch_size (the number of samples processed per weight update) and epochs (the number of passes over the entire training set). Fitting adjusts the weights based on the training data (X_train and y_train) over the specified number of epochs.

Evaluating the model: The model is evaluated using the .evaluate() method, which calculates the loss and metrics on a test dataset.

Making predictions with the model: Predictions are made with the .predict() method, which generates output for new input data. For binary classification, this method outputs predicted probabilities for the test data, which can be processed further (e.g., converted to binary labels using a threshold) to make final predictions.
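As a minimal sketch of that last step, the snippet below converts predicted probabilities into class labels. The probabilities are made-up values standing in for the output of model.predict(), and the 0.5 threshold is an assumption that may need tuning for a given problem.

```python
import numpy as np

# Hypothetical probabilities, shaped like the (n_samples, 1) output of model.predict()
y_prob = np.array([[0.91], [0.12], [0.50], [0.49]])

threshold = 0.5  # assumed cut-off, not prescribed by Keras

# Flatten to 1-D and assign label 1 when the probability reaches the threshold
y_label = (y_prob.ravel() >= threshold).astype(int)

print(y_label)
```

Raising the threshold makes the model more conservative about predicting class 1, and lowering it does the opposite.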

Deep Neural Network for Predicting House Price

In the following section, we will explore how to use Keras to build a deep neural network for a regression task: house price prediction.

Dataset

Let’s generate some data for house price prediction and split it for model training and evaluation.

Code

import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Generate random data for predicting house prices
np.random.seed(42)

# Simulate some features related to house prices
num_samples = 10000
square_feet = np.random.uniform(500, 5000, num_samples)  # Size of the house in square feet
num_bedrooms = np.random.randint(1, 6, num_samples)  # Number of bedrooms
num_bathrooms = np.random.randint(1, 4, num_samples)  # Number of bathrooms
age_of_house = np.random.randint(0, 100, num_samples)  # Age of the house in years

# Simulate house prices (target variable)
house_price = (square_feet * 150) + (num_bedrooms * 50) + (num_bathrooms * 200) - (age_of_house * 100) + np.random.normal(0, 100, num_samples)

# Create a DataFrame
data = pd.DataFrame({
    'square_feet': square_feet,
    'num_bedrooms': num_bedrooms,
    'num_bathrooms': num_bathrooms,
    'age_of_house': age_of_house,
    'house_price': house_price
})

X = data[['square_feet', 'num_bedrooms', 'num_bathrooms', 'age_of_house']]  # Features
y = data['house_price']  # Target variable

# Split the data into training and testing sets (80% training, 20% testing)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Display the data
data.head().style.format({'square_feet': '{:.2f}', 'house_price': '{:.2f}'})

   square_feet  num_bedrooms  num_bathrooms  age_of_house  house_price
0      2185.43             4              3            57    323026.52
1      4778.21             2              3             4    716980.79
2      3793.97             5              1            32    566312.73
3      3193.96             3              2            42    475287.50
4      1202.08             3              3            18    179391.17

Model Building with Keras

Code

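import keras

# Fix the random seed for reproducibility
keras.utils.set_random_seed(42)

n_features = len(X_train.columns)

model = keras.Sequential()
#model.add(keras.Input(shape=(len(X_train.columns),)))
model.add(keras.layers.Dense(20, activation='relu', input_shape=(n_features,)))  # First hidden layer
model.add(keras.layers.Dense(20, activation='relu'))  # Second hidden layer
model.add(keras.layers.Dense(1))  # Output layer (linear activation is the default)

model.compile(optimizer='adam', loss='mse', metrics=[keras.metrics.RootMeanSquaredError()])
model.fit(X_train, y_train, epochs=10, verbose=0)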

<keras.src.callbacks.History object at 0x3444bfdd0>

Code

model.summary()

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense (Dense)               (None, 20)                100
 dense_1 (Dense)             (None, 20)                420
 dense_2 (Dense)             (None, 1)                 21
=================================================================
Total params: 541 (2.11 KB)
Trainable params: 541 (2.11 KB)
Non-trainable params: 0 (0.00 Byte)
_________________________________________________________________
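The parameter counts in the summary can be checked by hand: a dense layer has one weight per input-unit pair plus one bias per unit. The short sketch below reproduces the numbers for this network (4 input features, two hidden layers of 20 units, 1 output). The helper name dense_params is introduced here purely for illustration.

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units

# 4 input features -> 20 units -> 20 units -> 1 output
layer_sizes = [4, 20, 20, 1]

counts = [dense_params(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:])]
total = sum(counts)

print(counts, total)
```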

Model Evaluation

Code

# evaluate() returns the loss followed by the compiled metrics
loss, rmse = model.evaluate(X_test, y_test, verbose=0)
print(f"MSE Loss: {loss}", "\n", f"RMSE: {rmse}")

MSE Loss: 51472788.0

RMSE: 7174.45361328125
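RMSE is the square root of MSE, which puts the error back in the same units as the target and makes it easier to interpret. The sketch below computes both on a few made-up prices (the arrays are illustrative, not taken from the dataset) and checks the relationship against the loss reported above.

```python
import math
import numpy as np

def mse(y_true, y_pred):
    """Mean of the squared differences between targets and predictions."""
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    return float(np.mean(diff ** 2))

# Hypothetical targets and predictions
y_true_demo = [300000.0, 500000.0, 450000.0]
y_pred_demo = [310000.0, 495000.0, 450000.0]

loss_demo = mse(y_true_demo, y_pred_demo)
rmse_demo = math.sqrt(loss_demo)

# Square root of the MSE loss reported by model.evaluate() above
rmse_from_reported_loss = math.sqrt(51472788.0)

print(loss_demo, rmse_demo, rmse_from_reported_loss)
```

The last value agrees with the reported RMSE up to floating-point rounding.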

Making Predictions

Code

y_pred = model.predict(X_test, verbose=0)
print("First few predictions", "\n", y_pred[0:5])

First few predictions

[[300168.06]

[588585.25]

[492338.16]

[105895.5 ]

[461491.78]]

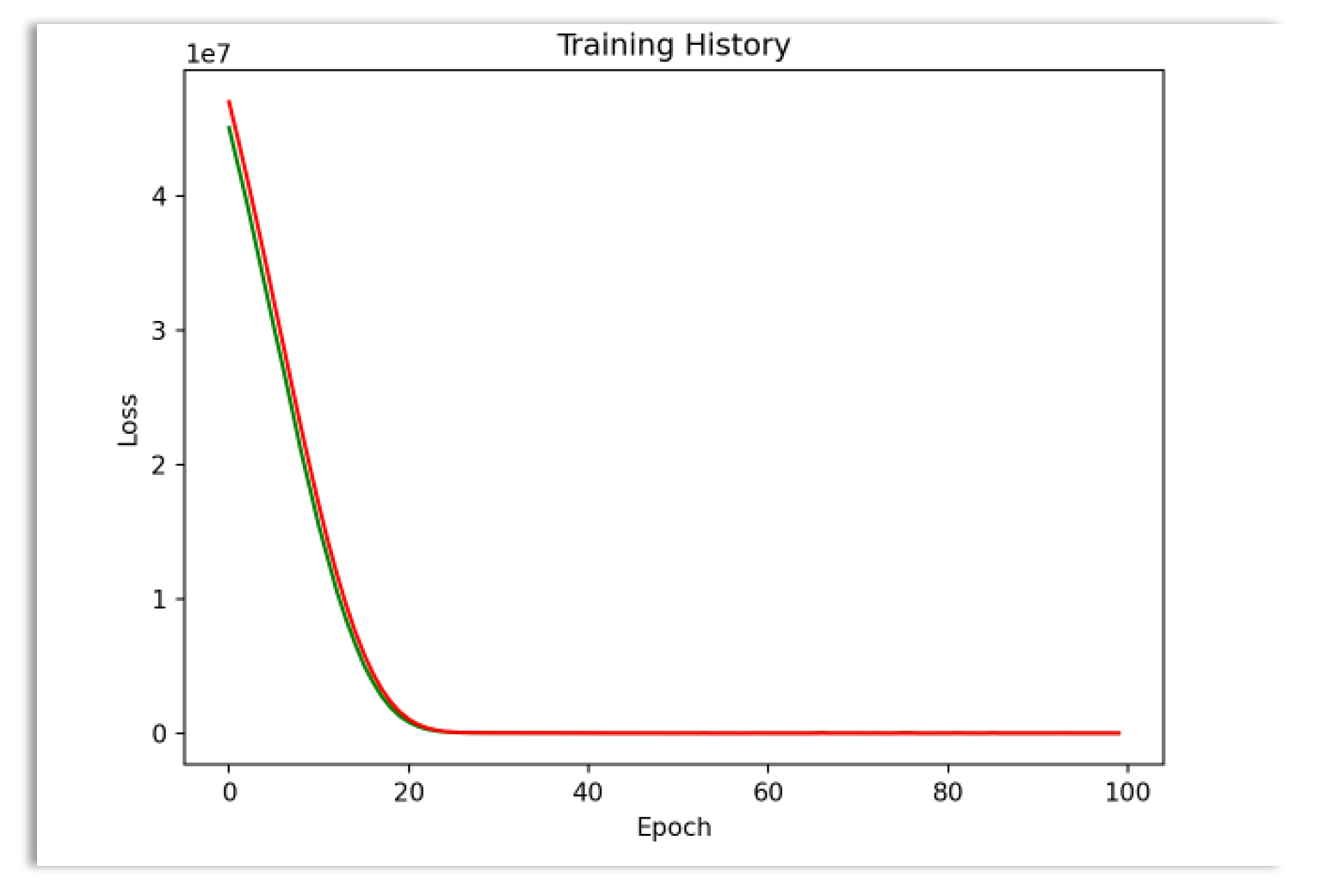

Analysis of Training History

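The fit() method returns a history object that records the loss after each epoch. Comparing the training and validation loss curves shows where the validation loss stops improving, which suggests a suitable number of epochs for early stopping.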

Code

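import matplotlib.pyplot as plt

# Continue training for 100 epochs, holding out 30% of the data for validation
history = model.fit(X, y, epochs=100, verbose=0, validation_split=0.3)

plt.title("Training History")
plt.xlabel("Epoch")
plt.ylabel('Loss')
plt.plot(history.history["val_loss"], color="green")  # validation loss
plt.plot(history.history["loss"], color="red");  # training loss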
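To make the idea of an early stop concrete, here is a small sketch of a patience-based rule: training stops once the validation loss has failed to improve for a given number of consecutive epochs. The loss values are invented for illustration and stand in for history.history["val_loss"]. The function name and the patience value are assumptions.

```python
def early_stop_epoch(losses, patience=2):
    """Return (stop_epoch, best_epoch), both 0-based.

    Stops at the first epoch where the loss has not improved
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(losses):
        if loss < best_loss:
            best_loss, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    return len(losses) - 1, best_epoch

# Hypothetical validation losses, one per epoch
val_loss_demo = [0.90, 0.62, 0.48, 0.41, 0.43, 0.40, 0.44, 0.47, 0.52]

stop_epoch, best_epoch = early_stop_epoch(val_loss_demo, patience=2)
print(stop_epoch, best_epoch)
```

In Keras, the keras.callbacks.EarlyStopping callback (with its monitor and patience arguments) applies a rule of this kind during fit(), so the loop above is only meant to show the logic.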

Deep Neural Network for Predicting Customer Churn

In the following section, we will explore how to use Keras to build a deep neural network for a classification task: predicting customer churn.

Let’s generate some data for customer churn prediction and split it for model training and evaluation.

Code

import numpy as np
import pandas as pd
from sklearn.model_selection import train_test_split

# Generate random data for predicting customer churn
np.random.seed(42)

# Simulate some features related to customer churn
num_samples = 10000
age = np.random.randint(18, 70, num_samples)  # Age of the customer
income = np.random.uniform(30000, 150000, num_samples)  # Income of the customer
num_products = np.random.randint(1, 5, num_samples)  # Number of products the customer uses
has_complaints = np.random.randint(0, 2, num_samples)  # Whether the customer had any complaints (0 or 1)

# Simulate churn (target variable: 1 for churn, 0 for no churn)
churn = np.random.choice([0, 1], size=num_samples)

# Create a DataFrame
data = pd.DataFrame({
    'age': age,
    'income': income,
    'num_products': num_products,
    'has_complaints': has_complaints,
    'churn': churn
})

X = data[['age', 'income', 'num_products', 'has_complaints']]  # Features
y = data['churn']  # Target variable

# Split the data into training and testing sets (80% training, 20% testing)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Display the data
data.head().style.format({'age': '{:.0f}', 'income': '{:.2f}'})

   age     income  num_products  has_complaints  churn
0   56  113031.28             4               1      1
1   69   48702.18             2               0      0
2   46   59213.11             3               0      0
3   32  131063.25             1               1      1
4   60   55116.73             1               0      1

Model Building with Keras

Code

import keras
import tensorflow as tf

# Fix the random seed for reproducibility
tf.random.set_seed(1234)

n_features = len(X_train.columns)

model = keras.Sequential()
#model.add(keras.Input(shape=(len(X_train.columns),)))  # Input layer with the features
model.add(keras.layers.Dense(15, activation='relu', input_shape=(n_features,)))  # First hidden layer
model.add(keras.layers.Dense(15, activation='relu'))  # Second hidden layer
model.add(keras.layers.Dense(1, activation='sigmoid'))  # Output layer (sigmoid for binary classification)

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
model.fit(X_train, y_train, epochs=10, verbose=0)

<keras.src.callbacks.History object at 0x3461d9f90>

Code

model.summary()

Model: "sequential_1"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 dense_3 (Dense)             (None, 15)                75
 dense_4 (Dense)             (None, 15)                240
 dense_5 (Dense)             (None, 1)                 16
=================================================================
Total params: 331 (1.29 KB)
Trainable params: 331 (1.29 KB)
Non-trainable params: 0 (0.00 Byte)
_________________________________________________________________

Model Evaluation

Code

# evaluate() returns the loss followed by the compiled metrics
loss, accuracy = model.evaluate(X_test, y_test, verbose=0)
print(f"Loss: {loss}", "\n", f"Accuracy: {accuracy}")

Loss: 246.32260131835938

Accuracy: 0.48899999260902405

Code

y_pred = model.predict(X_test, verbose=0)
print("First few predictions (probabilities for churn):", "\n", y_pred[0:5])

First few predictions (probabilities for churn):

[[0.]

[0.]

[0.]

[0.]

[0.]]
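Two things are worth noting about these results. First, the churn labels in this synthetic dataset are drawn at random, independently of the features, so an accuracy close to 0.5 is what any model should be expected to achieve. Second, the very large loss and the predictions of exactly 0 are likely related to feeding unscaled features (income is in the tens of thousands) into the network, which can push the sigmoid output to its extremes. A common remedy is to standardize each feature before training. The sketch below does this with NumPy on made-up stand-ins for two of the features. The variable names are illustrative and not part of the lesson's code.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for two churn features: age and income
X_train_demo = np.column_stack([
    rng.integers(18, 70, size=800),
    rng.uniform(30000, 150000, size=800),
]).astype(float)
X_test_demo = np.column_stack([
    rng.integers(18, 70, size=200),
    rng.uniform(30000, 150000, size=200),
]).astype(float)

# Learn the statistics from the training split only
mean = X_train_demo.mean(axis=0)
std = X_train_demo.std(axis=0)

# Apply the same transformation to both splits
X_train_scaled = (X_train_demo - mean) / std
X_test_scaled = (X_test_demo - mean) / std

print(X_train_scaled.mean(axis=0).round(6), X_train_scaled.std(axis=0).round(6))
```

Reusing the training mean and standard deviation on the test split keeps information about the test data from leaking into the preprocessing.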

Deep Neural Network for Image Classification

Deep neural networks can be used for image classification and object identification (computer vision).

A digital image consists of a grid of rows and columns, where each cell in the grid is called a pixel. A pixel is the smallest unit of the image and represents a single point in the picture. Each pixel has a color value, which defines its appearance.

For grayscale images, the pixel value is a single number that represents the brightness of the pixel. This value corresponds to a shade of gray, where lower values indicate darker shades, and higher values represent lighter shades.

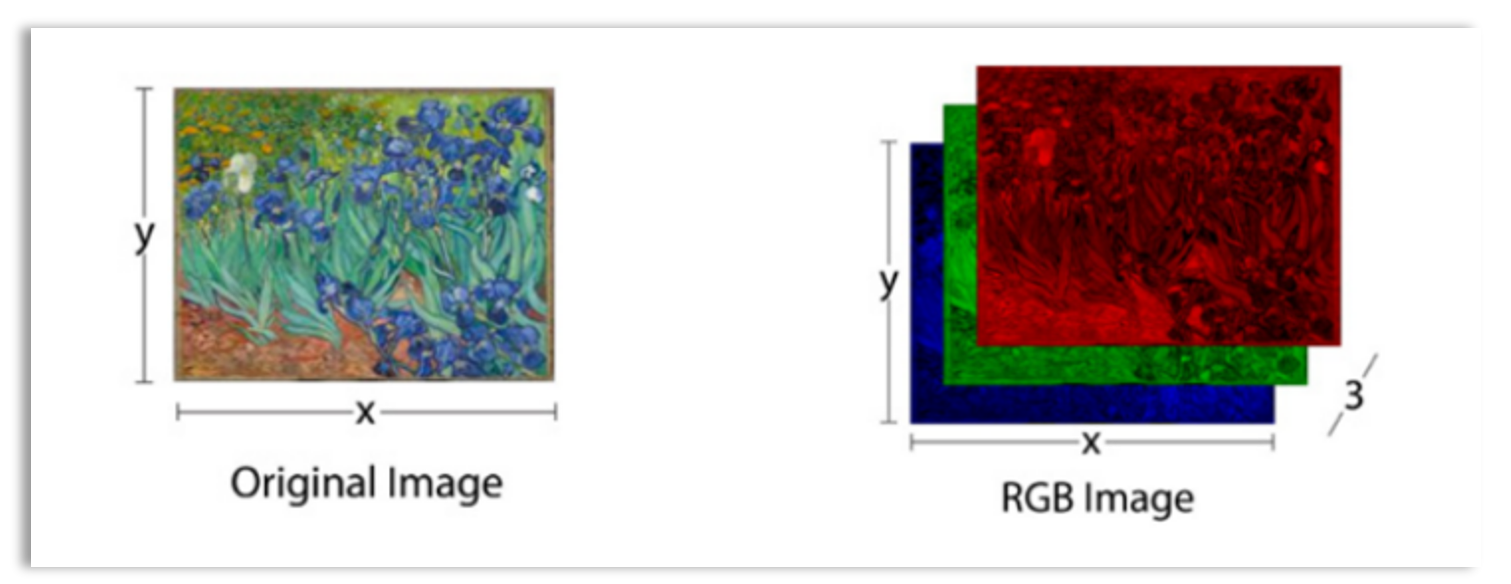

For color images, each pixel is defined by three color components: red, green, and blue (RGB). Each of these color components is stored as a separate grayscale image, known as a color plane. Together, these three color planes combine to form the full color representation of each pixel in the image.

Pixel values range from 0 to 255, where 0 represents the darkest value (black) and 255 represents the brightest value (white) in the case of grayscale images. To apply algorithms that learn from images, the images must be represented as numerical data, typically in the form of matrices or tensors. These numerical representations allow machine learning models to process and extract patterns from the images.
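As a small illustration, a grayscale image can be stored as a NumPy matrix of 8-bit integers. The sketch below builds a made-up 2x2 image and also rescales it to the range 0 to 1, a preprocessing step that is commonly applied before training a neural network (it is an assumption here, not a requirement stated in this lesson).

```python
import numpy as np

# A hypothetical 2x2 grayscale image: 0 is black, 255 is white
pixels = np.array([[0, 64],
                   [128, 255]], dtype=np.uint8)

# Convert to floating point and rescale to the range [0, 1]
scaled = pixels.astype("float32") / 255.0

print(pixels.shape, pixels.dtype)
print(scaled)
```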

Matrix and tensor representation of images: Grayscale images

A dataset of grayscale images is represented as a 3D tensor (equivalent to a 3D NumPy array). Its three dimensions are:

The first dimension represents the number of samples or images in the dataset.

The second dimension represents the height (number of rows) of the image.

The third dimension represents the width (number of columns) of the image.

Thus, the shape of a grayscale image dataset is (samples, height, width), where samples is the number of images, height is the vertical size of each image, and width is the horizontal size of each image.

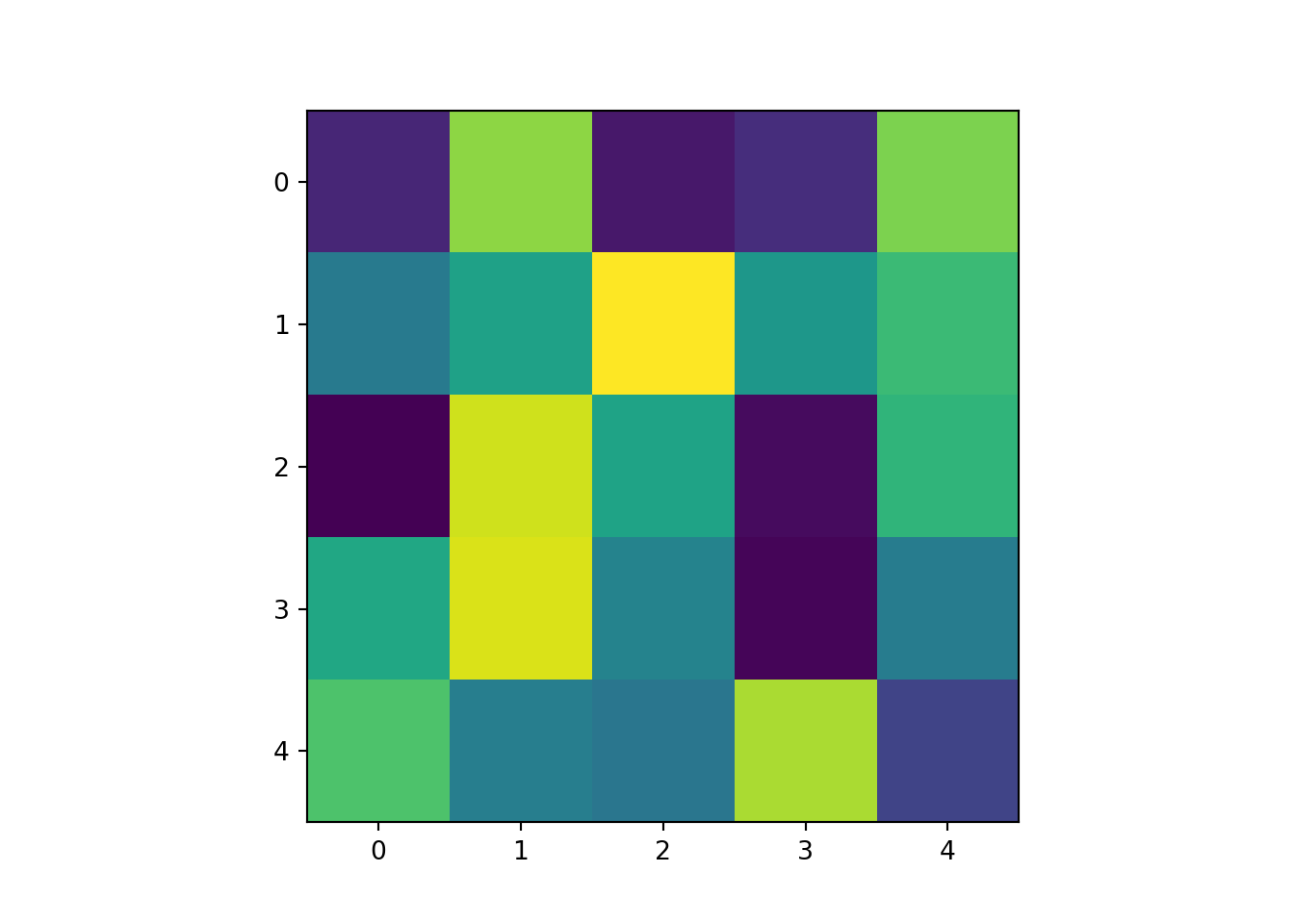

Let’s randomly generate 2 grayscale images with height=5 and width=5.

Code

np.random.seed(1234)

# high is exclusive, so the pixel values fall in the range 0 to 254
images = np.random.randint(low=0, high=255, size=50).reshape(2, 5, 5)
images

array([[[ 47, 211, 38, 53, 204],

[116, 152, 249, 143, 177],

[ 23, 233, 154, 30, 171],

[158, 236, 124, 26, 118],

[186, 120, 112, 220, 69]],

[[ 80, 201, 127, 246, 254],

[175, 50, 240, 251, 76],

[ 37, 34, 166, 250, 195],

[231, 139, 128, 233, 75],

[ 80, 3, 2, 19, 140]]])

Code

# shape and dimension of image
print("Image shape: ", images.shape, "\n", "Image dimension: ", images.ndim)

Image shape: (2, 5, 5)

Image dimension: 3

Below is one of the images.

Code

import matplotlib.pyplot as plt
print(images[0])

[[ 47 211 38 53 204]

[116 152 249 143 177]

[ 23 233 154 30 171]

[158 236 124 26 118]

[186 120 112 220 69]]

Code

The 3D image dataset with shape (samples, height, width) can be flattened into a 2D dataset by reshaping each image into a vector. We can reshape the dataset using images.reshape(samples, height * width).

Code

samples, height, width = images.shape
print("Samples: ", samples, "\n", "Image Height: ", height, "\n", "Image Width: ", width)

Samples: 2

Image Height: 5

Image Width: 5

Code

# Flatten each 5x5 image into a vector of 25 values
images_2d = images.reshape(samples, height * width)
images_2d

array([[ 47, 211, 38, 53, 204, 116, 152, 249, 143, 177, 23, 233, 154,

30, 171, 158, 236, 124, 26, 118, 186, 120, 112, 220, 69],

[ 80, 201, 127, 246, 254, 175, 50, 240, 251, 76, 37, 34, 166,

250, 195, 231, 139, 128, 233, 75, 80, 3, 2, 19, 140]])
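Flattening only changes how the values are arranged, not the values themselves, so the original images can be recovered by reshaping back. The sketch below shows this on a small made-up array. Passing -1 asks NumPy to infer the flattened length (height * width) automatically.

```python
import numpy as np

# Hypothetical dataset: 2 images of 5x5 pixels filled with the values 0..49
demo_images = np.arange(50).reshape(2, 5, 5)

# Flatten each image into one row; -1 lets NumPy infer 5 * 5 = 25
flat = demo_images.reshape(demo_images.shape[0], -1)

# Reshape back to (samples, height, width)
restored = flat.reshape(demo_images.shape)

print(flat.shape, restored.shape)
```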

3D Image Datasets (Grayscale Images)

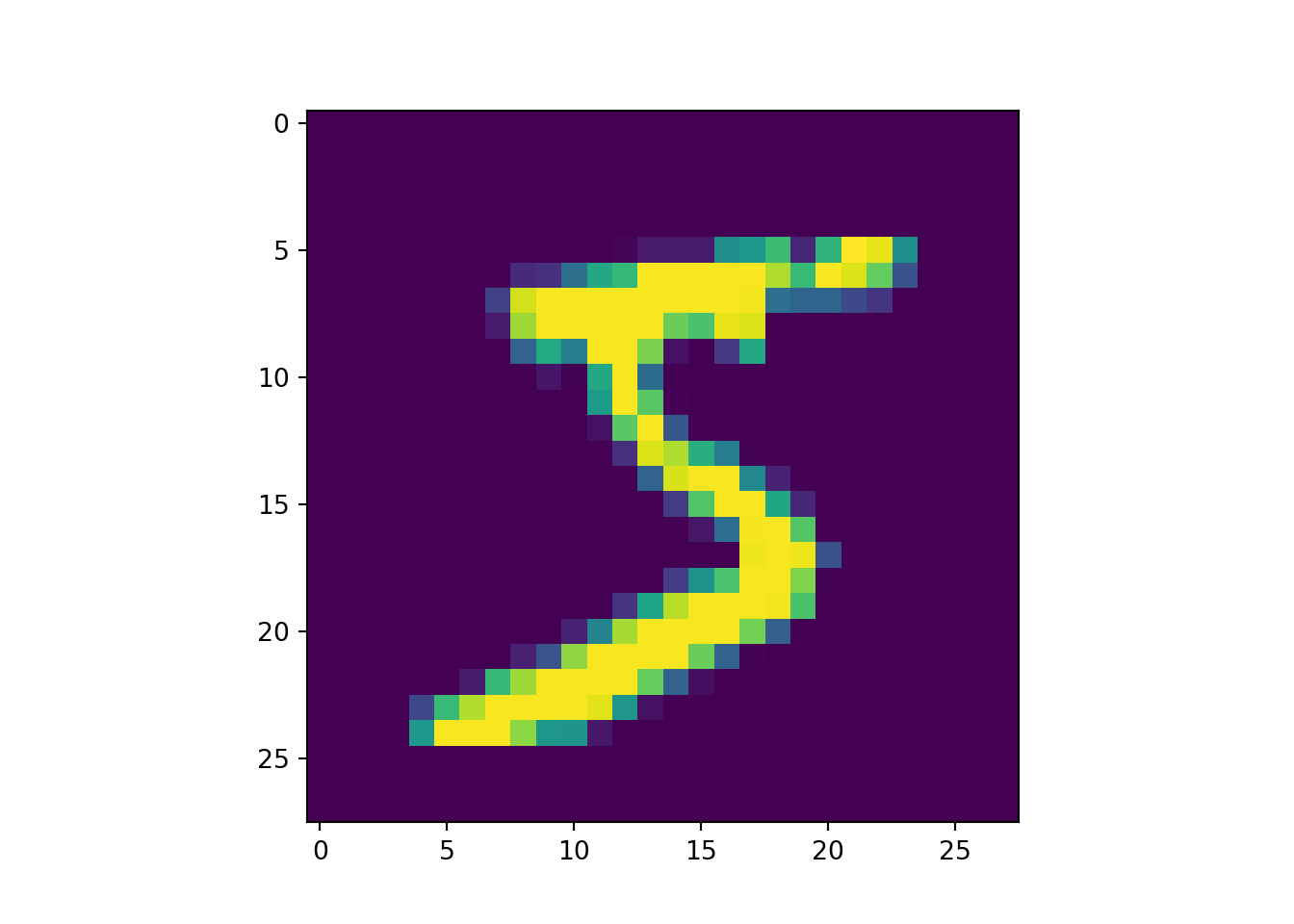

An example of a 3D image dataset is MNIST, which contains a training set of 60,000 28x28 grayscale images of the 10 digits, along with a test set of 10,000 images. The MNIST dataset can be loaded using the mnist.load_data() function from the keras.datasets module as follows:

Code

from tensorflow.keras.datasets import mnist

# Load MNIST dataset
(X_train, y_train), (X_test, y_test) = mnist.load_data()

# Display shapes of the dataset
print(f"Training data shape: {X_train.shape}\nTest data shape: {X_test.shape}")

Training data shape: (60000, 28, 28)

Test data shape: (10000, 28, 28)

Let’s view the first image in the training set

4D Image Datasets (Color Images)

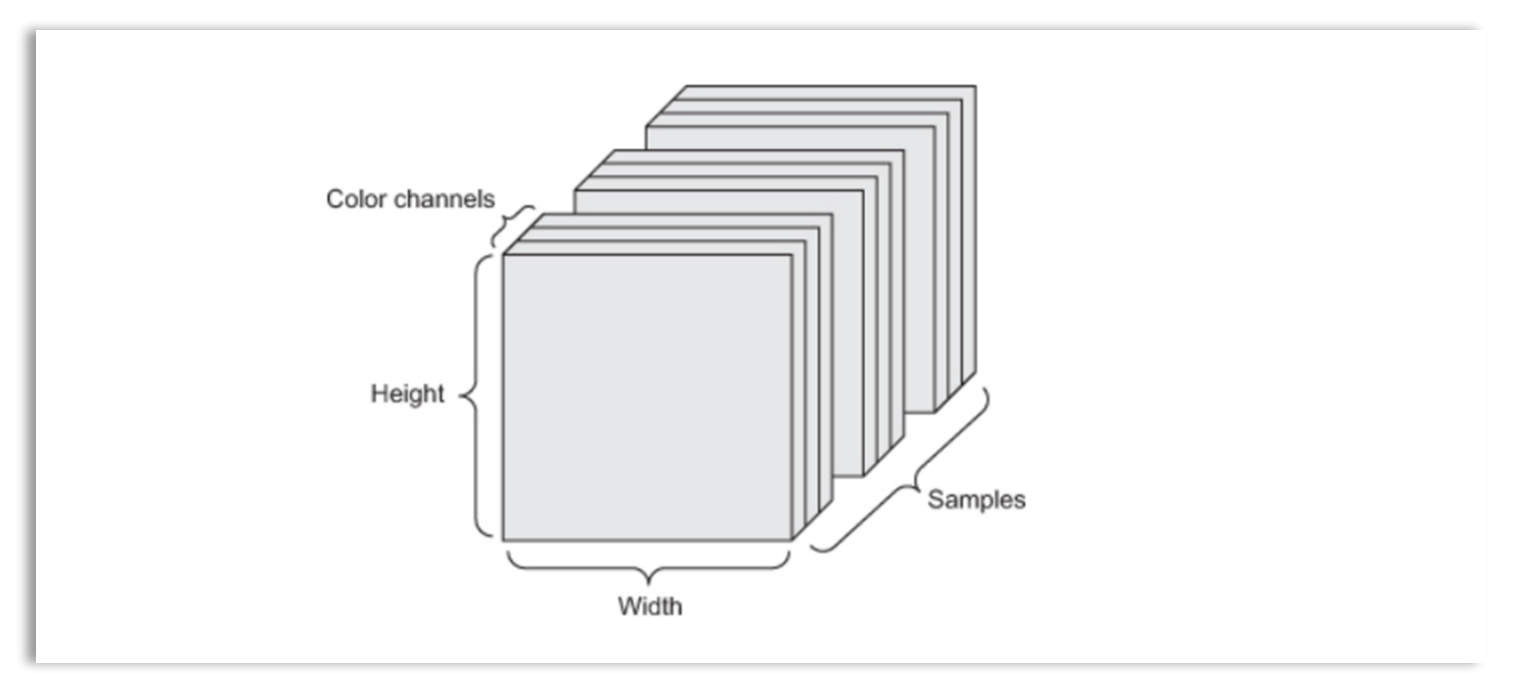

Color image datasets are represented as 4D tensors with the shape (samples, height, width, color_depth). Each image has a height and a width, plus three channels holding the red, green, and blue components, so color_depth is 3. In other words, each color image consists of three grayscale color planes, and a whole set of color images can be stored in a single 4D array.

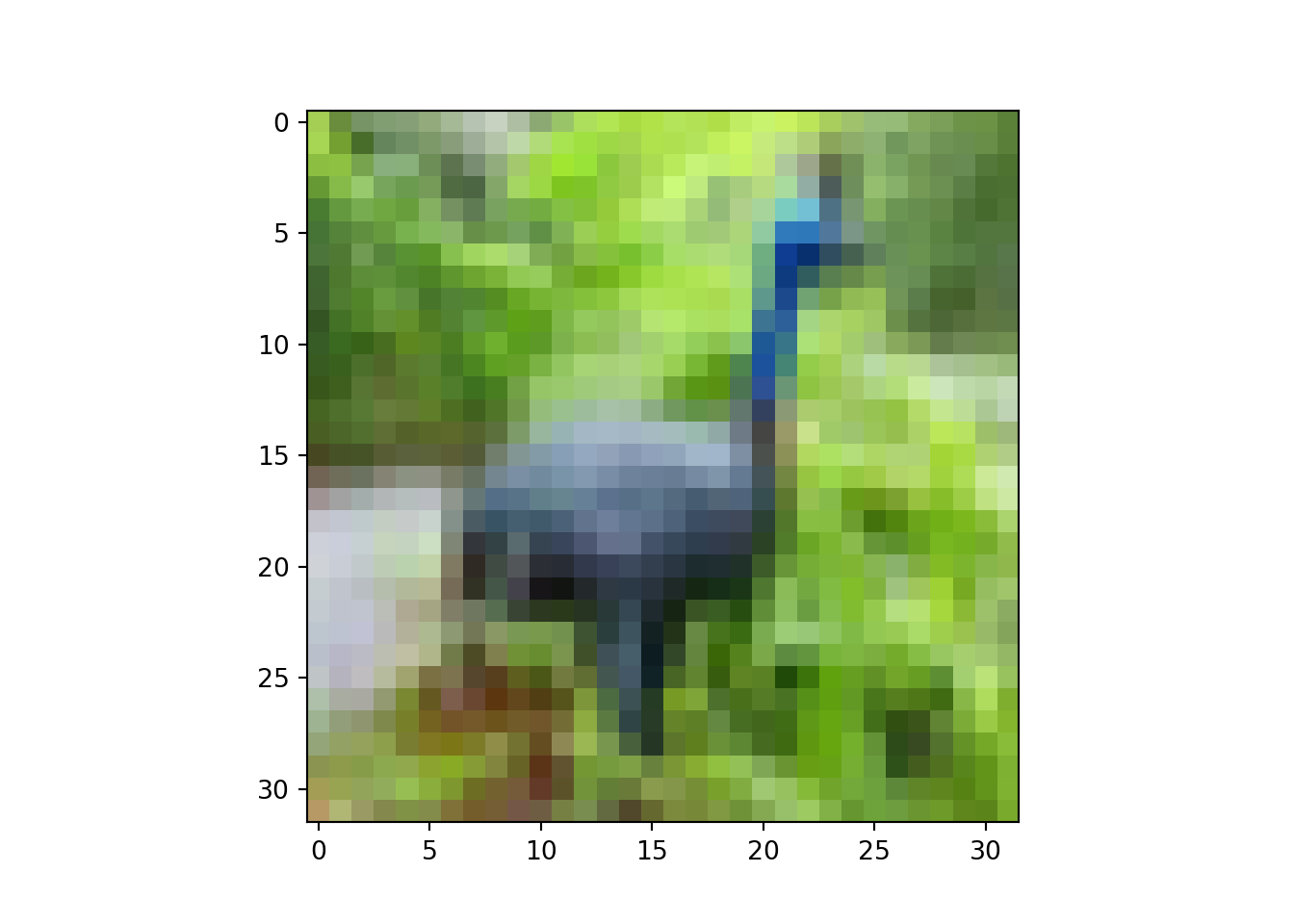

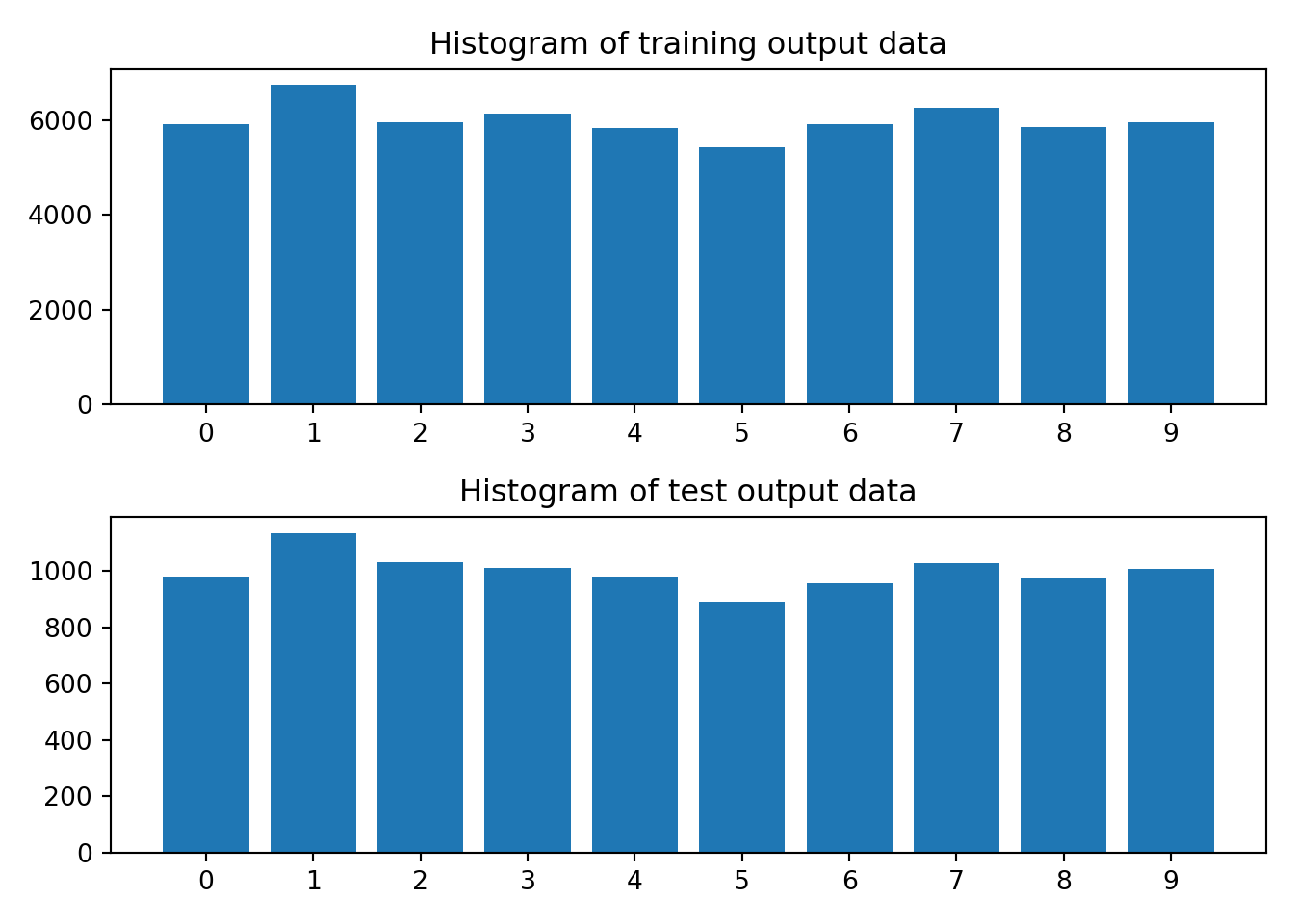
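The following sketch builds a small random 4D array with the layout (samples, height, width, color_depth) and pulls out the red plane of the first image. The pixel values are random and purely illustrative, and the channel order (red, green, blue) is the conventional one assumed here.

```python
import numpy as np

rng = np.random.default_rng(0)

# 2 hypothetical color images of 5x5 pixels with 3 channels (R, G, B)
color_images = rng.integers(0, 256, size=(2, 5, 5, 3), dtype=np.uint8)

# Red color plane of the first image: a 5x5 grayscale matrix
red_plane = color_images[0, :, :, 0]

print("Dataset shape:", color_images.shape, "dimensions:", color_images.ndim)
print("Red plane shape:", red_plane.shape)
```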

An example of a 4D image dataset is CIFAR-10, which consists of 50,000 32x32 color training images and 10,000 test images, labeled with 10 categories: 0 = airplane; 1 = automobile; 2 = bird; 3 = cat; 4 = deer; 5 = dog; 6 = frog; 7 = horse; 8 = ship; 9 = truck. The CIFAR-10 dataset can be loaded using the cifar10.load_data() function from the keras.datasets module.

Code

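import ssl

# To avoid SSL errors during the download.
# Note: this disables HTTPS certificate verification for the current process.
ssl._create_default_https_context = ssl._create_unverified_context

# Load CIFAR-10 dataset
from keras.datasets import cifar10
(X_train, y_train), (X_test, y_test) = cifar10.load_data()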

Downloading data from https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz


29409280/170498071 [====>.........................] - ETA: 45s���������������������������������������������������������������

29605888/170498071 [====>.........................] - ETA: 45s���������������������������������������������������������������

29720576/170498071 [====>.........................] - ETA: 45s���������������������������������������������������������������

29999104/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

30031872/170498071 [====>.........................] - ETA: 45s���������������������������������������������������������������

30310400/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

30474240/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

30703616/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

30785536/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

31014912/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

31096832/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

31358976/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

31563776/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

31612928/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

31801344/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

31940608/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

32202752/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

32325632/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

32555008/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

32833536/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

32997376/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

33226752/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

33406976/170498071 [====>.........................] - ETA: 43s���������������������������������������������������������������

33579008/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

33775616/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

33890304/170498071 [====>.........................] - ETA: 44s���������������������������������������������������������������

34226176/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

34480128/170498071 [=====>........................] - ETA: 43s���������������������������������������������������������������

34529280/170498071 [=====>........................] - ETA: 43s���������������������������������������������������������������

34586624/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

34922496/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

35127296/170498071 [=====>........................] - ETA: 43s���������������������������������������������������������������

35143680/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

35373056/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

35520512/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

35684352/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

35840000/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

35979264/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36020224/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36110336/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36274176/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36364288/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36478976/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36626432/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36659200/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36716544/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36864000/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

36962304/170498071 [=====>........................] - ETA: 44s���������������������������������������������������������������

37093376/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37208064/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37322752/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37363712/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37494784/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37683200/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37756928/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37855232/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

37945344/170498071 [=====>........................] - ETA: 45s���������������������������������������������������������������

38035456/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38133760/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38223872/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38273024/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38371328/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38477824/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38567936/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38674432/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38780928/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38895616/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

38993920/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

39034880/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39182336/170498071 [=====>........................] - ETA: 46s���������������������������������������������������������������

39256064/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39354368/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39395328/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39469056/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39542784/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39616512/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39706624/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39780352/170498071 [=====>........................] - ETA: 47s���������������������������������������������������������������

39813120/170498071 [======>.......................] - ETA: 47s���������������������������������������������������������������

39903232/170498071 [======>.......................] - ETA: 47s���������������������������������������������������������������

39976960/170498071 [======>.......................] - ETA: 47s���������������������������������������������������������������

40067072/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40148992/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40239104/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40312832/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40386560/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40476672/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40558592/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40640512/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40722432/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40812544/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

40902656/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41000960/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41074688/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41164800/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41263104/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41353216/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41451520/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41541632/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41639936/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41730048/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41820160/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

41926656/170498071 [======>.......................] - ETA: 48s���������������������������������������������������������������

42008576/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42123264/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42188800/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42254336/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42336256/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42409984/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42483712/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42565632/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42639360/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42713088/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42795008/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42876928/170498071 [======>.......................] - ETA: 49s���������������������������������������������������������������

42942464/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43008000/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43073536/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43171840/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43253760/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43311104/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43376640/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43458560/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43540480/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43548672/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43638784/170498071 [======>.......................] - ETA: 50s���������������������������������������������������������������

43696128/170498071 [======>.......................] - ETA: 51s���������������������������������������������������������������

43753472/170498071 [======>.......................] - ETA: 51s���������������������������������������������������������������

43810816/170498071 [======>.......................] - ETA: 51s���������������������������������������������������������������

43819008/170498071 [======>.......................] - ETA: 51s���������������������������������������������������������������

43884544/170498071 [======>.......................] - ETA: 51s���������������������������������������������������������������

43950080/170498071 [======>.......................] - ETA: 51s���������������������������������������������������������������

43999232/170498071 [======>.......................] - ETA: 51s���������������������������������������������������������������

44007424/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44089344/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44138496/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44187648/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44236800/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44294144/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44351488/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44400640/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44449792/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44507136/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44564480/170498071 [======>.......................] - ETA: 52s���������������������������������������������������������������

44621824/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

44687360/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

44736512/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

44785664/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

44843008/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

44900352/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

44974080/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45039616/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45113344/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45178880/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45244416/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45309952/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45375488/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45424640/170498071 [======>.......................] - ETA: 53s���������������������������������������������������������������

45498368/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

45563904/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

45637632/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

45703168/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

45776896/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

45858816/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

45883392/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

45940736/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46014464/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46080000/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46153728/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46227456/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46309376/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46383104/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46456832/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46546944/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46628864/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46694400/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46735360/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46825472/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46891008/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

46972928/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47063040/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47136768/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47210496/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47300608/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47382528/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47464448/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47554560/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47652864/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47734784/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47816704/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47906816/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

47996928/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

48087040/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

48177152/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

48275456/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

48373760/170498071 [=======>......................] - ETA: 54s���������������������������������������������������������������

48398336/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

48480256/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

48578560/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

48644096/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

48685056/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

48775168/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

48865280/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

48971776/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49061888/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49127424/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49217536/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49315840/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49397760/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49455104/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49545216/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49610752/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49717248/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49831936/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

49938432/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

50053120/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

50167808/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

50274304/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

50380800/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������

50495488/170498071 [=======>......................] - ETA: 55s���������������������������������������������������������������
