Lesson 8: Generative Modeling

Generative Modeling

Generative modeling is an unsupervised learning approach whose goal is to learn the underlying probability distribution of the input data. It involves two steps: estimating the density (probability distribution) of the data, and generating new sample data from the learned distribution.
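As a minimal illustration of those two steps, the sketch below fits a one-dimensional Gaussian to data by maximum likelihood (density estimation) and then draws new samples from the fitted model (generation). The Gaussian family, the toy dataset, and the function names `fit_gaussian`, `log_density`, and `generate` are assumptions for illustration only. The models in this lesson learn far more flexible distributions using neural networks.

```python
import numpy as np

def fit_gaussian(data):
    """Density estimation: maximum-likelihood mean and standard deviation."""
    mu = data.mean()
    sigma = data.std()  # ddof=0 gives the maximum-likelihood estimate
    return mu, sigma

def log_density(x, mu, sigma):
    """Log of the fitted Gaussian density p(x)."""
    return -0.5 * np.log(2 * np.pi * sigma**2) - (x - mu) ** 2 / (2 * sigma**2)

def generate(mu, sigma, n, rng):
    """Generation: draw n new samples from the learned distribution."""
    return rng.normal(mu, sigma, size=n)

rng = np.random.default_rng(0)
# Hypothetical "training data": 1,000 points from a source we pretend not to know.
data = rng.normal(loc=5.0, scale=2.0, size=1000)

mu, sigma = fit_gaussian(data)
samples = generate(mu, sigma, n=5, rng=rng)
print(f"learned mu={mu:.2f}, sigma={sigma:.2f}")
print("new samples:", np.round(samples, 2))
```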

Examples of generative models include:

- Autoencoders (AEs)

- Variational autoencoders (VAEs)

- Generative Adversarial Networks (GANs)

Autoencoders (AE)

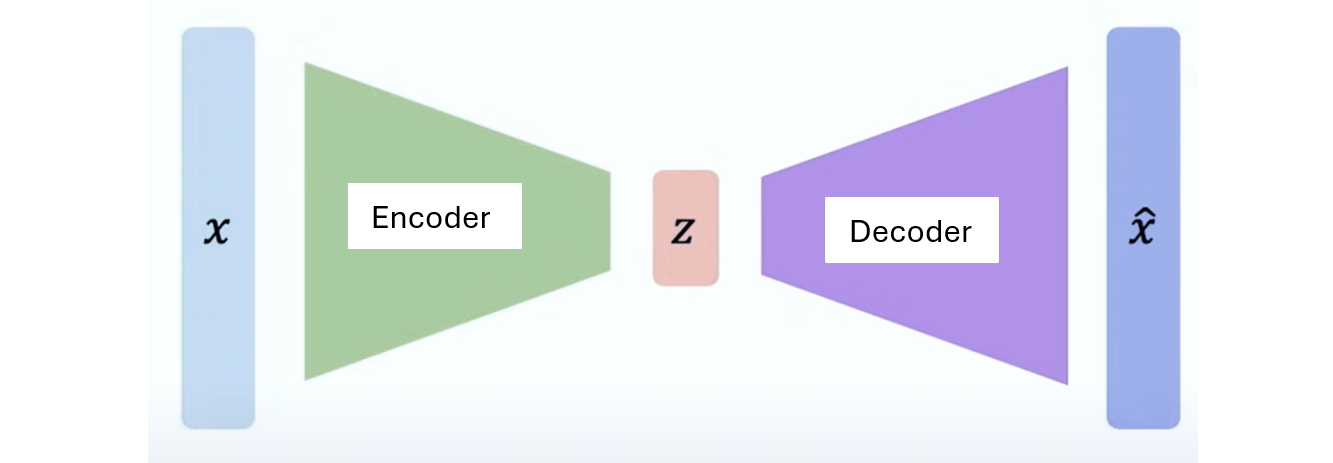

Autoencoders are neural networks consisting of an encoder network and a decoder network.

The encoder is a network that learns to map an input \(x\) to a low-dimensional latent representation \(z\).

The decoder takes the low-dimensional representation \(z\) and maps it back to the input space, learning to reconstruct the original data \(x\) from \(z\). That is, the decoder learns to generate an output \(\hat{x}\) that looks like the original input \(x\).

Training objective: The goal of training is to minimize the reconstruction loss between \(x\) and \(\hat{x}\), measured with a loss function such as the Mean Squared Error (MSE). Backpropagation computes the gradients of this loss with respect to the encoder and decoder weights, and these gradients are used to update the weights of the whole encoder-decoder model.
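The training loop can be sketched in a few lines, assuming a purely linear encoder and decoder (no biases, no nonlinearities) and a hypothetical toy dataset of 4-D points that lie near a 2-D subspace. Real autoencoders use deeper nonlinear networks and an autodiff library; here the backpropagation steps are written out by hand so each chain-rule step is visible.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy data: 4-D points that actually live near a 2-D subspace.
n = 500
latent = rng.normal(size=(n, 2))
A = np.array([[1.0, 0.0, 1.0, 0.0],
              [0.0, 1.0, 0.0, 1.0]])
X = latent @ A + 0.05 * rng.normal(size=(n, 4))

# Linear encoder (4 -> 2) and decoder (2 -> 4) with small random initial weights.
W_enc = 0.1 * rng.normal(size=(4, 2))
W_dec = 0.1 * rng.normal(size=(2, 4))

def forward(X, W_enc, W_dec):
    Z = X @ W_enc      # encoder: x -> z
    X_hat = Z @ W_dec  # decoder: z -> x_hat
    return Z, X_hat

def mse(X, X_hat):
    return np.mean((X_hat - X) ** 2)

lr = 0.05
initial_loss = mse(X, forward(X, W_enc, W_dec)[1])

for _ in range(2000):
    Z, X_hat = forward(X, W_enc, W_dec)
    # Gradient of the MSE with respect to the reconstruction x_hat...
    dX_hat = 2.0 * (X_hat - X) / X.size
    # ...propagated back through the decoder and then the encoder (chain rule).
    dW_dec = Z.T @ dX_hat
    dZ = dX_hat @ W_dec.T
    dW_enc = X.T @ dZ
    # Gradient-descent update of both networks.
    W_enc -= lr * dW_enc
    W_dec -= lr * dW_dec

final_loss = mse(X, forward(X, W_enc, W_dec)[1])
print(f"reconstruction MSE: {initial_loss:.4f} -> {final_loss:.4f}")
```

Because the bottleneck has only two dimensions, the network can reconstruct the data well only by discovering the 2-D structure hidden in the 4-D inputs.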

The term “autoencoder” reflects that the network learns to encode its own input (“auto” meaning “self”): the training target is the input itself, and the latent encoding is learned automatically from the data rather than designed by hand.

Variational Autoencoders

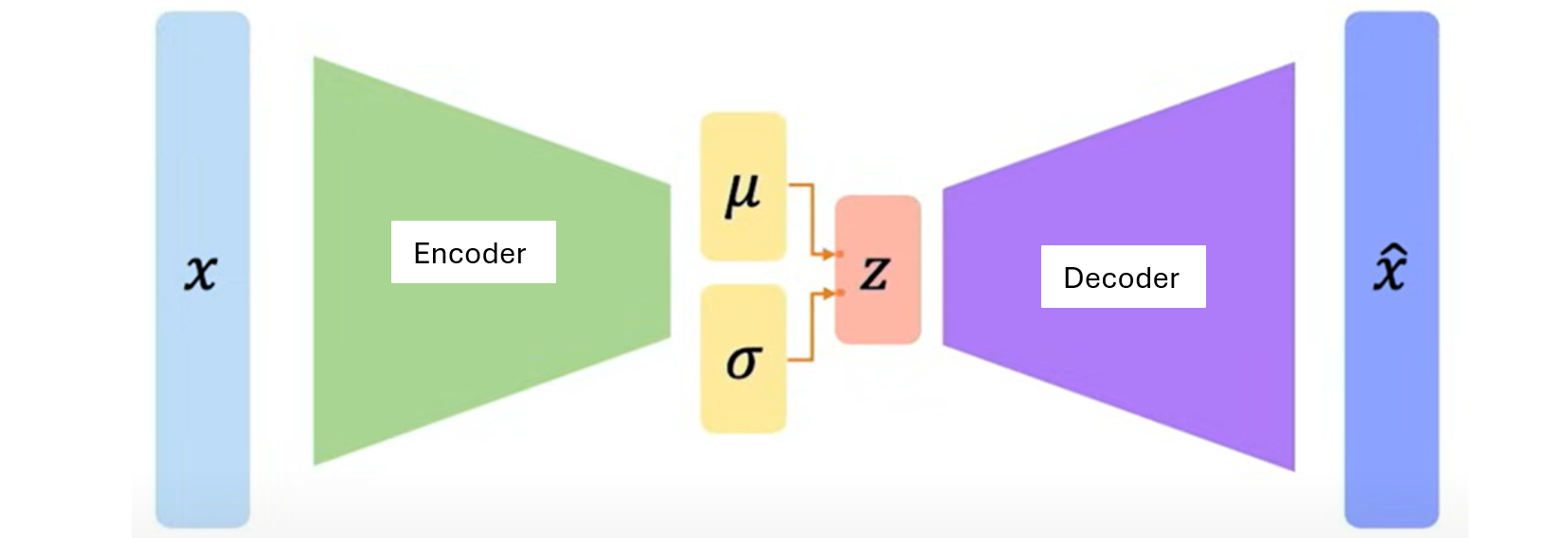

Autoencoders are deterministic. After training, passing the same input through the network always produces the same output, with no variability. To introduce variability into the generated output, we can use a variational autoencoder (VAE). Like an autoencoder, a variational autoencoder consists of an encoder network and a decoder network.

The encoder network maps an input \(x\) to a lower-dimensional latent representation \(z\), but it does not output \(z\) directly. Instead, it outputs the mean \(\mu\) and the standard deviation \(\sigma\), which are the parameters of the Gaussian distribution \(q(z|x)\) from which \(z\) is sampled.

The last hidden layer of the encoder network is transformed linearly into two vectors, \(\mu\) and \(\sigma\). The idea is to use \(\mu\) and \(\sigma\) to build a sampling layer \(z \sim N(\mu, \sigma^2)\). However, if \(z\) is sampled directly from this normal distribution, we cannot backpropagate the gradient through the sampling layer. Sampling is a random, non-differentiable operation, so the chain rule cannot supply derivatives such as \(\frac{\partial z}{\partial \mu}\).
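The sketch below shows one way to build the two linear output heads on top of the encoder's last hidden layer. The sizes (hidden layer of 8, latent space of 2) and the weight names are hypothetical. One deviation from the text is an assumption worth flagging: many implementations have the second head predict \(\log \sigma^2\) rather than \(\sigma\) itself, then recover \(\sigma = \exp(\tfrac{1}{2}\log\sigma^2)\), which keeps \(\sigma\) positive without constraining the linear layer.

```python
import numpy as np

rng = np.random.default_rng(0)

hidden_dim, latent_dim = 8, 2  # hypothetical sizes

# Two separate linear maps applied to the same last hidden layer h.
W_mu = rng.normal(scale=0.1, size=(hidden_dim, latent_dim))
b_mu = np.zeros(latent_dim)
W_logvar = rng.normal(scale=0.1, size=(hidden_dim, latent_dim))
b_logvar = np.zeros(latent_dim)

def encoder_heads(h):
    """Map hidden activations h of shape (batch, hidden_dim) to (mu, sigma)."""
    mu = h @ W_mu + b_mu
    # Assumption: this head predicts log(sigma^2), so sigma is always positive.
    log_var = h @ W_logvar + b_logvar
    sigma = np.exp(0.5 * log_var)
    return mu, sigma

h = rng.normal(size=(4, hidden_dim))  # stand-in for a batch of 4 hidden-layer outputs
mu, sigma = encoder_heads(h)
print(mu.shape, sigma.shape)
```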

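Instead, \(z\) is sampled using the reparameterization trick: \(z\) is computed as the mean \(\mu\) plus the standard deviation \(\sigma\) scaled by random noise \(\epsilon\) sampled from a standard normal distribution:

\[ z = \mu + \sigma \odot \epsilon, \qquad \epsilon \sim N(0, I) \]

where the symbol \(\odot\) denotes element-wise multiplication. Because the randomness now enters only through \(\epsilon\), which does not depend on the network's parameters, \(z\) is a differentiable function of \(\mu\) and \(\sigma\), with element-wise derivatives \(\frac{\partial z}{\partial \mu} = 1\) and \(\frac{\partial z}{\partial \sigma} = \epsilon\).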
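A small numerical check of the reparameterization trick, with hypothetical values for \(\mu\) and \(\sigma\). The first part confirms that \(z = \mu + \sigma \odot \epsilon\) has the intended distribution \(N(\mu, \sigma^2)\). The second part holds one draw of \(\epsilon\) fixed and uses finite differences to confirm that \(z\) responds smoothly to \(\mu\) and \(\sigma\) (derivative 1 and \(\epsilon\), element-wise), which is what lets gradients flow back into the encoder.

```python
import numpy as np

def reparameterize(mu, sigma, eps):
    """z = mu + sigma * eps (element-wise), with eps drawn from N(0, I) by the caller."""
    return mu + sigma * eps

rng = np.random.default_rng(0)
mu = np.array([0.5, -1.0])    # hypothetical encoder outputs
sigma = np.array([1.0, 0.2])

# 1) Many samples: their mean and std should be close to mu and sigma.
eps = rng.standard_normal(size=(100_000, 2))
z = reparameterize(mu, sigma, eps)
print("sample mean:", z.mean(axis=0).round(2))
print("sample std: ", z.std(axis=0).round(2))

# 2) With eps held fixed, z is a differentiable function of mu and sigma.
eps0 = rng.standard_normal(size=2)
h = 1e-6
dz_dmu = (reparameterize(mu + h, sigma, eps0) - reparameterize(mu, sigma, eps0)) / h
dz_dsigma = (reparameterize(mu, sigma + h, eps0) - reparameterize(mu, sigma, eps0)) / h
print("dz/dmu    ~", dz_dmu.round(4))     # 1 in every component
print("dz/dsigma ~", dz_dsigma.round(4))  # matches eps0
print("eps0      =", eps0.round(4))
```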

The decoder network maps the sampled latent vector to an output \(\hat{x}\) that is as close as possible to \(x\).

The encoder network computes the parameters of the Gaussian distribution \(q(z|x)\), from which \(z\) is sampled. The decoder network is a generative network that models the conditional probability distribution \(p(x|z)\), the likelihood of generating \(x\) given \(z\), with the goal of reconstructing \(x\) from \(z\).

Training objective: The overall goal of a variational autoencoder is to learn a generative model of the data that captures the underlying structure and distribution of the input data.

The variational autoencoder is trained to maximize an objective called the Evidence Lower Bound (ELBO), or equivalently to minimize the negative ELBO, which consists of two parts:

- Reconstruction loss, which measures the difference between \(x\) and \(\hat{x}\)

- A KL divergence regularization term, which measures the divergence between the learned latent distribution \(q(z|x)\) and a prior distribution \(p(z)\), typically a standard Gaussian \(N(0, I)\).
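When \(q(z|x)\) is a Gaussian with diagonal covariance, with mean \(\mu\) and standard deviation \(\sigma\) as produced by the encoder, and the prior is \(p(z) = N(0, I)\), the KL term has a standard closed form. For a \(d\)-dimensional latent vector:

\[ \text{KL}\big(q(z|x) \,\|\, p(z)\big) = \frac{1}{2} \sum_{j=1}^{d} \left( \mu_j^2 + \sigma_j^2 - \log \sigma_j^2 - 1 \right) \]

Each summand is zero exactly when \(\mu_j = 0\) and \(\sigma_j = 1\), so this term pulls every latent dimension toward the standard normal prior.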

The Evidence Lower Bound (ELBO) for a Variational Autoencoder is given by:

\[ \text{ELBO}(\theta, \phi; x) = \mathbb{E}_{q_{\phi}(z|x)}[\log p_{\theta}(x|z)] - \text{KL}(q_{\phi}(z|x) || p(z)) \]

where:

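- \(\theta\) are the parameters of the decoder network,

- \(\phi\) are the parameters of the encoder network, and

- \(p(z)\) is the prior distribution over the latent variable.

The first term rewards accurate reconstruction of \(x\); the second keeps \(q_{\phi}(z|x)\) close to the prior \(p(z)\).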
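A minimal NumPy sketch of the quantity minimized in practice, the negative ELBO for one example, estimated with a single reparameterized sample of \(z\). It assumes binary data and a Bernoulli decoder, so \(\log p_{\theta}(x|z)\) becomes a negative binary cross-entropy, and it uses the closed-form KL between a diagonal Gaussian and \(N(0, I)\). The decoder weights `W_dec` and the example values are hypothetical stand-ins for a trained network.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gaussian_kl(mu, sigma):
    """KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions."""
    return 0.5 * np.sum(mu**2 + sigma**2 - np.log(sigma**2) - 1.0)

def bernoulli_log_likelihood(x, probs, eps=1e-7):
    """log p(x|z) for binary x under independent Bernoulli outputs."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return np.sum(x * np.log(probs) + (1.0 - x) * np.log(1.0 - probs))

def negative_elbo(x, mu, sigma, decode, rng):
    """One-sample Monte Carlo estimate of -ELBO = reconstruction loss + KL."""
    eps = rng.standard_normal(size=mu.shape)
    z = mu + sigma * eps                       # reparameterization trick
    log_lik = bernoulli_log_likelihood(x, decode(z))
    kl = gaussian_kl(mu, sigma)
    elbo = log_lik - kl
    return -elbo, -log_lik, kl

rng = np.random.default_rng(0)
W_dec = rng.normal(size=(2, 6))  # hypothetical decoder weights: latent 2 -> data 6

def decode(z):
    """Hypothetical decoder: Bernoulli probabilities for each of 6 binary pixels."""
    return sigmoid(z @ W_dec)

x = np.array([1.0, 0.0, 1.0, 1.0, 0.0, 0.0])  # one binary example
mu = np.array([0.3, -0.2])                    # hypothetical encoder outputs
sigma = np.array([0.8, 1.1])
loss, recon_loss, kl = negative_elbo(x, mu, sigma, decode, rng)
print(f"-ELBO = {loss:.3f}  (reconstruction {recon_loss:.3f} + KL {kl:.3f})")
```

In a full VAE, this loss would be averaged over a batch and differentiated with respect to both the encoder parameters \(\phi\) (through \(\mu\) and \(\sigma\)) and the decoder parameters \(\theta\).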

Generative Adversarial Networks

Generative Adversarial Networks, or GANs, let us generate high-quality samples without focusing on the interpretability of the latent variable.

GANs do not model the data density explicitly. Instead, they learn to transform samples from a simple distribution into samples that resemble the data. This avoids modeling a complex distribution directly, which is difficult.

A GAN samples from something simple, such as random noise, and trains a generator network to map each sample to data that looks like the training data. A discriminator network is trained to classify its inputs as real (from the training data) or fake (produced by the generator). The generator never sees the real data directly. Instead, it improves through the discriminator's feedback, following the gradients of the discriminator's output so that its samples become harder to tell apart from the real data.
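The two roles can be expressed as two binary cross-entropy losses. The sketch below computes them from discriminator outputs \(D(\cdot) \in (0, 1)\), read as the probability that the input is real. It uses the common "non-saturating" generator loss \(-\log D(G(z))\), which is a modeling choice rather than the only option. The discriminator outputs are hypothetical numbers, not results from a trained model.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-7):
    """BCE with real samples labeled 1 and generated samples labeled 0."""
    d_real = np.clip(d_real, eps, 1 - eps)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return -np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake))

def generator_loss(d_fake, eps=1e-7):
    """Non-saturating generator loss: the generator wants D(G(z)) close to 1."""
    d_fake = np.clip(d_fake, eps, 1 - eps)
    return -np.mean(np.log(d_fake))

# Hypothetical discriminator outputs on a batch of real and generated samples.
d_real = np.array([0.9, 0.8, 0.95])
d_fake_easy = np.array([0.1, 0.05, 0.2])   # discriminator easily spots the fakes
d_fake_fooled = np.array([0.7, 0.6, 0.8])  # generator is fooling the discriminator

for name, d_fake in [("easy", d_fake_easy), ("fooled", d_fake_fooled)]:
    print(name,
          "D loss:", round(float(discriminator_loss(d_real, d_fake)), 3),
          "G loss:", round(float(generator_loss(d_fake)), 3))
```

When the discriminator spots the fakes, its own loss is low and the generator's loss is high; as the generator improves, the balance shifts the other way.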

The generator network \(G\) and the discriminator network \(D\) are trained together, alternating updates between the two, until the generator is able to produce data that is close to the real data.
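This joint training is usually written as a two-player minimax game over a value function \(V(D, G)\). In the standard formulation, \(p_{\text{data}}\) is the data distribution and \(p(z)\) is the noise distribution:

\[ \min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}[\log D(x)] + \mathbb{E}_{z \sim p(z)}[\log(1 - D(G(z)))] \]

The discriminator tries to increase \(V\) by assigning high probability to real data and low probability to generated data, while the generator tries to decrease \(V\) by making \(D(G(z))\) large.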

At generation time, a GAN starts from random noise, such as a vector sampled from a normal distribution, and the generator maps it to a data sample such as an image.
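A sketch of that generation step, assuming a hypothetical two-layer generator that maps a 16-dimensional noise vector to a 28×28 image with pixel values in \([0, 1]\). The weights here are random and untrained, so the output images carry no meaningful content. The point is the mechanics: generation means drawing \(z \sim N(0, I)\) and running it through \(G\), and each fresh noise vector gives a different sample.

```python
import numpy as np

rng = np.random.default_rng(0)
noise_dim, hidden_dim, height, width = 16, 64, 28, 28  # hypothetical sizes

# Hypothetical, untrained generator weights; a real GAN learns these during training.
W1 = rng.normal(scale=0.1, size=(noise_dim, hidden_dim))
W2 = rng.normal(scale=0.1, size=(hidden_dim, height * width))

def generator(z):
    """G(z): a batch of noise vectors -> a batch of images with pixels in [0, 1]."""
    h = np.maximum(0.0, z @ W1)               # ReLU hidden layer
    pixels = 1.0 / (1.0 + np.exp(-(h @ W2)))  # sigmoid squashes pixels into [0, 1]
    return pixels.reshape(-1, height, width)

z = rng.standard_normal(size=(3, noise_dim))  # three noise vectors sampled from N(0, I)
images = generator(z)
print(images.shape)
```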